FACS (Facial Action Coding System)

How we behave and perceive the world often depends on our emotions. For example, the product we choose from the shelf may be influenced by a funny or touching advertisement we saw on TV. That’s why, in the neuromarketing world, it’s so important to understand the emotions that a piece of creative work evokes.

How can we recognize emotions?

To analyze emotions, we can naturally ask study participants how they feel. But an even better idea is to collect data about people's emotions without asking them a single question. To do so, we can use a webcam-based tool that implements the Facial Action Coding System.

The Facial Action Coding System (FACS) is a system for quantifying and measuring facial movements. It was initially developed by the Swedish anatomist Carl-Herman Hjortsjö and adopted by Paul Ekman and Wallace V. Friesen in 1978. Ekman and Friesen later updated the system in 2002. FACS encodes the movements of individual facial muscles from slight, momentary changes in facial appearance.

There are seven universal emotions: fear, anger, sadness, disgust, contempt, surprise, and enjoyment (happiness). Even blind people show the same spontaneous facial expressions, which suggests that these expressions are innate rather than visually learned. Using FACS, coders can code almost any human facial behavior by breaking it down into specific action units (AUs), which are defined as contractions or relaxations of one or more facial muscles. The FACS manual has over 500 pages and describes all action units along with their meaning according to Ekman’s interpretation.

Measuring facial movement

Researchers can learn how to read and interpret the action units from manuals and workshops to manually code the facial actions, but nowadays, computers are getting better and better at recognizing and identifying the FACS codes. Let’s take a look at the main codes used for facial expression recognition:

| AU number | FACS name |
|---|---|
| 0 | Neutral face |
| 1 | Inner brow raiser |
| 2 | Outer brow raiser |
| 4 | Brow lowerer |
| 5 | Upper lid raiser |
| 6 | Cheek raiser |
| 7 | Lid tightener |
| 8 | Lips toward each other |
| 9 | Nose wrinkler |
| 10 | Upper lip raiser |
| 11 | Nasolabial deepener |
| 12 | Lip corner puller |
| 13 | Sharp lip puller |
| 14 | Dimpler |
| 15 | Lip corner depressor |
| 16 | Lower lip depressor |
| 17 | Chin raiser |
| 18 | Lip pucker |
| 19 | Tongue show |
| 20 | Lip stretcher |
| 21 | Neck tightener |
| 22 | Lip funneler |
| 23 | Lip tightener |
| 24 | Lip pressor |
| 25 | Lips part |
| 26 | Jaw drop |
| 27 | Mouth stretch |
| 28 | Lip suck |

Of course, there are many more FACS codes, such as head movement codes, eye movement codes, visibility codes, and gross behavior codes. Each code can also carry an intensity, written as a letter appended to the AU number - from A (the minimal intensity possible for the given person) to E (the maximal intensity):

A - trace,

B - slight,

C - marked or pronounced,

D - severe or extreme,

E - maximum.

Sometimes additional letters are added:

R - if the action happens only on the right side of the face,

L - if the action happens only on the left side of the face,

U - if the action occurs on only one side of the face but has no specific side,

A - if the action is bilateral but is stronger on one side of the face.
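The notation above can be read mechanically. Here is a minimal Python sketch that splits a code such as 5B or R12A into its parts, assuming the side letter comes before the AU number and the intensity letter after it (as in the codes used in this article). The names `parse_code`, `parse_expression`, and `ActionUnitCode` are made up for this illustration and do not come from any FACS tool.

```python
import re
from typing import List, NamedTuple, Optional

# Letter meanings as listed in the text above.
SIDES = {
    "R": "right side only",
    "L": "left side only",
    "U": "one side, unspecified",
    "A": "bilateral, stronger on one side",
}
INTENSITIES = {
    "A": "trace",
    "B": "slight",
    "C": "marked or pronounced",
    "D": "severe or extreme",
    "E": "maximum",
}


class ActionUnitCode(NamedTuple):
    side: Optional[str]       # key of SIDES, or None if not given
    number: int               # the AU number
    intensity: Optional[str]  # key of INTENSITIES, or None if not given


# Assumption: optional side prefix, AU number, optional intensity suffix.
_CODE = re.compile(r"^([RLUA])?(\d+)([A-E])?$")


def parse_code(code: str) -> ActionUnitCode:
    """Split a single code such as '5B' or 'R12A' into its parts."""
    match = _CODE.match(code.strip().upper())
    if match is None:
        raise ValueError(f"not a recognizable AU code: {code!r}")
    side, number, intensity = match.groups()
    return ActionUnitCode(side, int(number), intensity)


def parse_expression(expression: str) -> List[ActionUnitCode]:
    """Split a '+'-joined combination such as '1+2+5B+26'."""
    return [parse_code(part) for part in expression.split("+")]
```

For example, `parse_code("R12A")` returns `ActionUnitCode(side='R', number=12, intensity='A')`. The letter A has two meanings depending on its position: before the number it marks a bilateral but asymmetric action, and after the number it marks trace intensity.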

Knowing all of that, let’s take a look at some emotions decoded by the FACS:

| Emotion | Action units |
|---|---|
| Surprise | 1+2+5B+26 |
| Sadness | 1+4+15 |
| Happiness | 6+12 |
| Fear | 1+2+4+5+7+20+26 |
| Disgust | 9+15+17 |
| Contempt | R12A+R14A |
| Anger | 4+5+7+23 |

FACS provides all the facial action units responsible for a specific facial expression. Let’s take a look at “surprise”. It consists of:

1 - inner brow raiser,

2 - outer brow raiser,

5B - slight upper lid raiser,

26 - jaw drop.

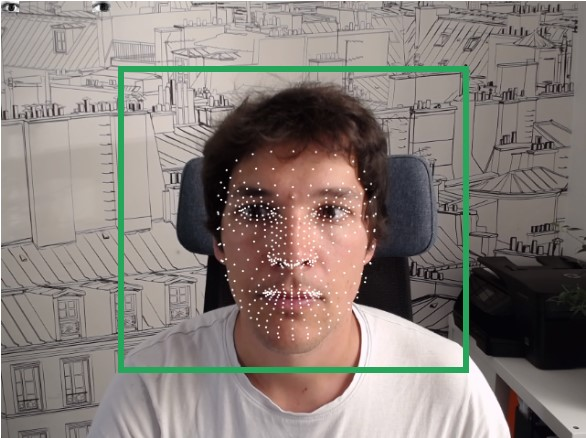
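To make the mapping from action units to emotions concrete, here is a small Python sketch that checks which of the combinations from the emotion table are fully present in a set of observed action units. It is a simplification for illustration only: intensity and side letters are dropped (so contempt becomes plain 12+14), and the names `PROTOTYPES` and `matching_emotions` are hypothetical. It is not how RealEye or any other tool actually classifies expressions.

```python
# AU combinations from the emotion table, reduced to AU numbers only.
# Simplification: intensity (5B) and side (R12A, R14A) are ignored here.
PROTOTYPES = {
    "surprise": {1, 2, 5, 26},
    "sadness": {1, 4, 15},
    "happiness": {6, 12},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "disgust": {9, 15, 17},
    "contempt": {12, 14},
    "anger": {4, 5, 7, 23},
}


def matching_emotions(active_units):
    """Return the emotions whose listed AUs are all among the active ones."""
    active = set(active_units)
    return sorted(
        emotion for emotion, units in PROTOTYPES.items() if units <= active
    )


print(matching_emotions({1, 2, 5, 26}))  # the "surprise" combination
print(matching_emotions({6, 12, 25}))    # a smile with parted lips
```

Note that the fear combination contains every action unit of the surprise combination, so a plain subset check like this one reports both for a fearful face. A real classifier has to resolve such overlaps, for example by using intensities.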

RealEye automated facial action coding feature

In RealEye, we also use the FACS (by Paul Ekman Group) to code emotions in our facial expression recognition system. There are three plots available:

happy - when the cheeks are raised and the lip corners are pulled (the person is smiling),

surprise - when the brows are raised, the upper lids are slightly raised, and the jaw drops,

neutral - when there is no facial movement.

The data is captured at a 30 Hz sampling rate (a sample is taken roughly every 33 ms). All the facial motion data can be exported to a CSV file (values are normalized from 0 to 1).
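As a rough illustration of working with such an export, the Python sketch below estimates how long an emotion value stayed above a threshold. At 30 Hz, each sample covers 1000 / 30 ≈ 33.3 ms. The column names (`happy`, `surprise`, `neutral`), the sample values, and the 0.5 threshold are assumptions made for this example; check the header of your own exported file for the actual layout.

```python
import csv
import io

SAMPLE_RATE_HZ = 30
SAMPLE_INTERVAL_MS = 1000 / SAMPLE_RATE_HZ  # roughly 33.3 ms per sample


def time_above(rows, column, threshold=0.5):
    """Approximate time in ms during which `column` exceeds `threshold`.

    The 0.5 default is an arbitrary choice for this example.
    """
    count = sum(1 for row in rows if float(row[column]) > threshold)
    return count * SAMPLE_INTERVAL_MS


# Hypothetical excerpt of an export; real column names may differ.
sample_csv = """happy,surprise,neutral
0.10,0.05,0.85
0.62,0.08,0.30
0.71,0.04,0.25
"""

rows = list(csv.DictReader(io.StringIO(sample_csv)))
print(f"happy above 0.5 for about {time_above(rows, 'happy'):.0f} ms")
```

To use it on a real export, replace the `io.StringIO(sample_csv)` part with an opened file handle.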

Remember that talking during the study (e.g., in moderated sessions) changes facial features and therefore influences emotion reading. You can also run RealEye studies with the facial coding feature disabled.

Why don’t we provide more emotions?

We ran a lot of internal tests and had many discussions within the team. We also talked a lot with our customers to understand their point of view. All of that led us to the conclusion that the three emotions offered by our tool are the most commonly observed and, at the same time, the most important.

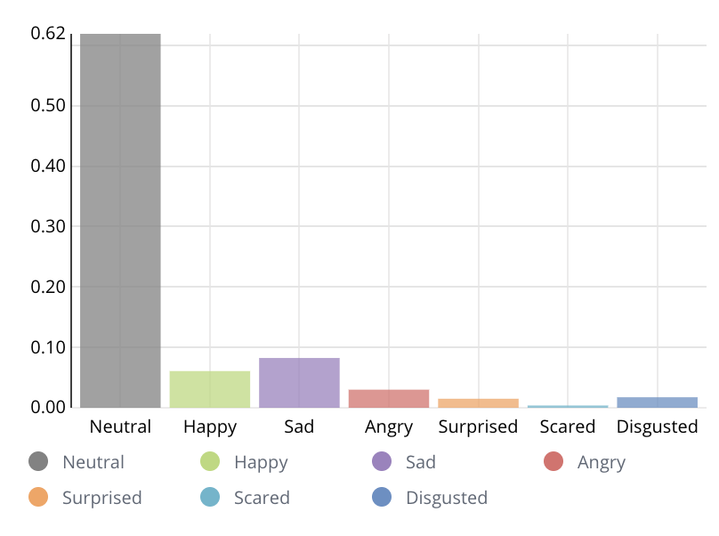

Before implementing FACS in our tool, we checked and tested several tools and platforms dedicated to facial coding (all of which used FACS as well). We observed that the great majority of the results showed only surprise and happiness - the graphs for other emotions showed almost no changes at all. When sitting in front of a screen, people usually don’t show many emotions, especially anger, sadness, disgust, or fear. We didn't see data for these emotions, no matter what the stimulus was. So, after further investigation, we realized that we can’t guarantee that emotions other than happiness, surprise, neutrality, and sadness would be actual emotions rather than face reading errors. It’s also worth noting that the RealEye tool performs individual facial calibration for every participant, which isn’t standard for facial coding tools - that’s why those tools detect sadness too often (as in the “resting face” case). To illustrate, here’s a plot showing the emotions detected by one of the mentioned tools while study participants watched an entertaining/neutral ad.

In our opinion, based on our experience and tests, the detected sadness is caused by the resting face effect, which makes the results less trustworthy for us.

Considering all of that, and to let you analyze facial expressions with a high level of confidence, we decided to add this feature to our tool but narrow the spectrum of detected emotions to the ones that are most commonly used and that we are sure about (the ones with the lowest error rate), so as to avoid any uncertain data. From a technical point of view, we could extend our offer to capture sadness as well.