AI is making its way into nearly every corner of media production, and subtitling is no exception. But how do viewers actually experience these machine-generated subtitles? A recent peer-reviewed study, "Through the Eyes of the Viewer: The Cognitive Load of LLM-Generated vs. Professional Arabic Subtitles" by Hussein Abu-Rayyash and Isabel Lacruz (Kent State University), put that question to the test. The researchers used RealEye's web-based eye-tracking platform to measure the cognitive load of Arabic-speaking viewers watching AI-translated versus human-translated subtitles.

Here’s what the researchers discovered, and why it matters.

The study set out to compare GPT-4o-generated Arabic subtitles with professionally created human translations, measuring which type imposes more cognitive load on viewers. This matters for two key reasons:

The 82 participants, all native Arabic speakers, watched the same 10-minute episode of the BBC comedy *State of the Union*. They were split into two groups:

RealEye tracked viewers' gaze in real time through their standard webcams. This setup let the researchers collect accurate data remotely and non-invasively on:

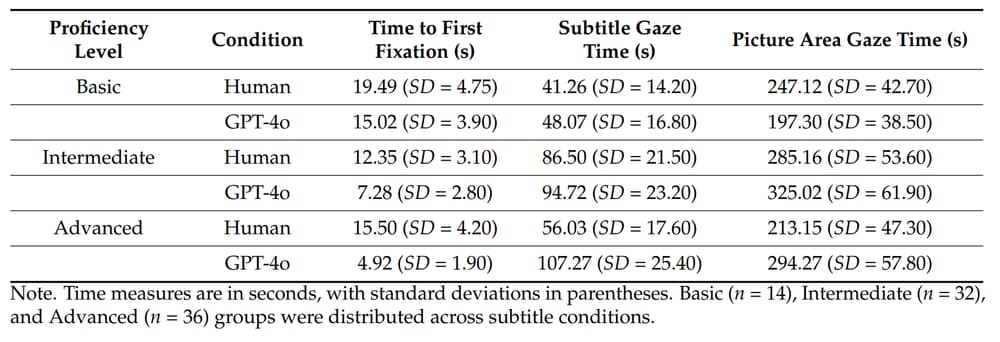

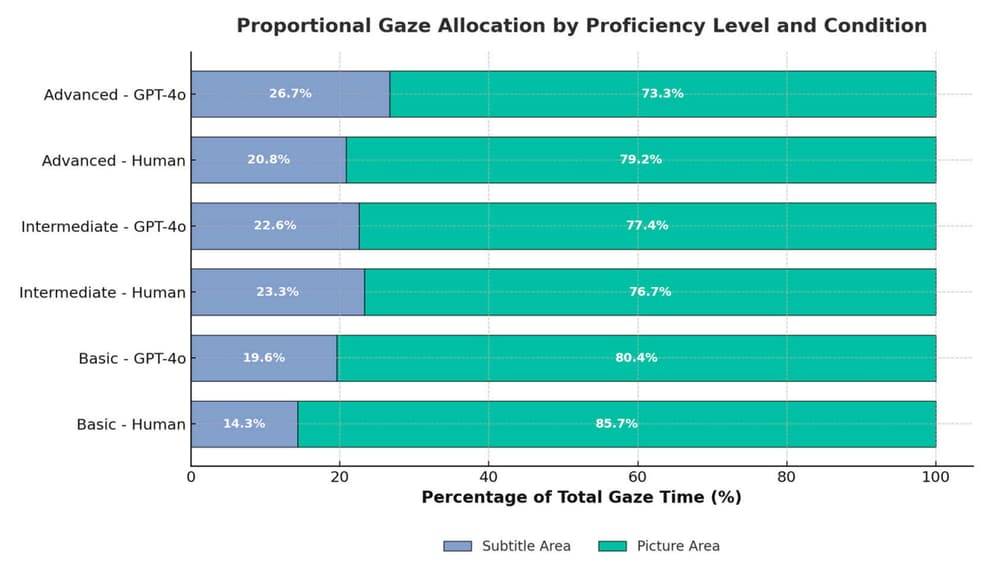

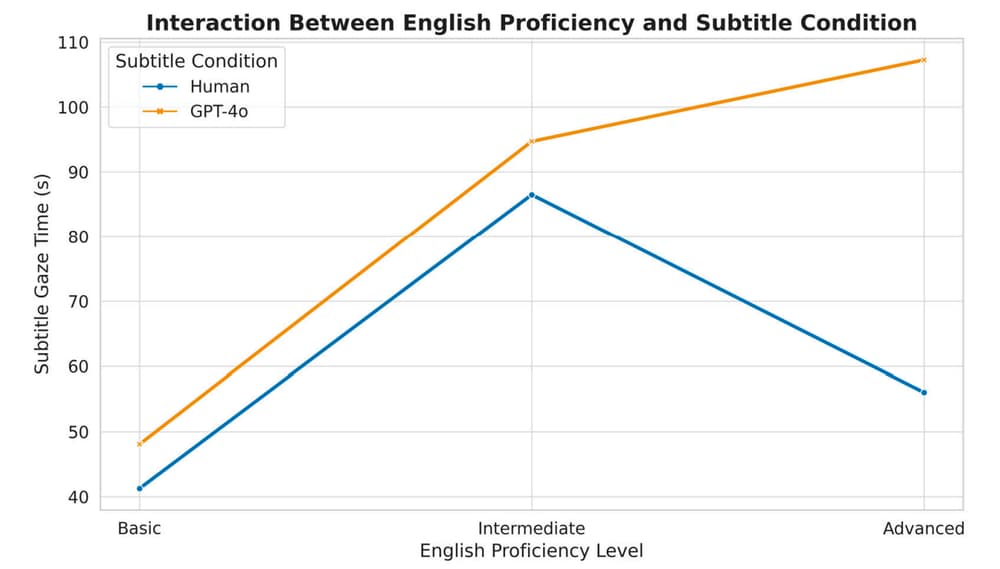

The data painted a clear picture: AI-generated subtitles caused significantly more cognitive strain.

In other words, GPT-4o subtitles may have looked readable, but they made people work harder to read them.

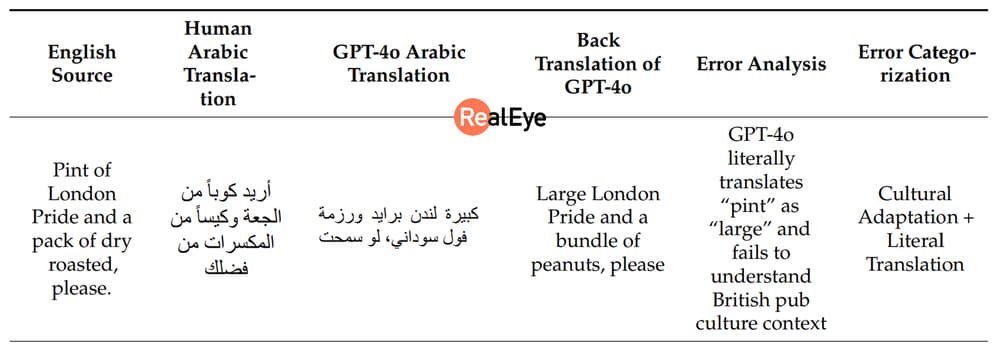

Despite their fluent surface quality, GPT-4o subtitles struggled with:

These issues forced viewers to pause, reread, and mentally reconcile mismatched meanings. This was especially true for viewers with higher English proficiency, who were more sensitive to translation flaws.

One of the most striking results: the higher the viewer’s English proficiency, the greater the cognitive disruption caused by GPT-4o subtitles. Viewers with advanced English spotted errors more easily and spent more time trying to make sense of them. Ironically, AI subtitles may be most frustrating for the people best equipped to understand the original English dialogue.

This study is one of the first to offer quantitative evidence that LLM-generated subtitles, while fast to produce, still carry a hidden cost: viewer effort. The findings emphasize that:

As AI-generated subtitles proliferate across streaming services and educational content, it's essential to ask: are they actually helping viewers, or are they silently straining them?

This study shows that RealEye can reveal the subtle, otherwise invisible ways in which AI output affects the user experience. It also reminds us that in translation, nuance matters, and the human eye knows it.

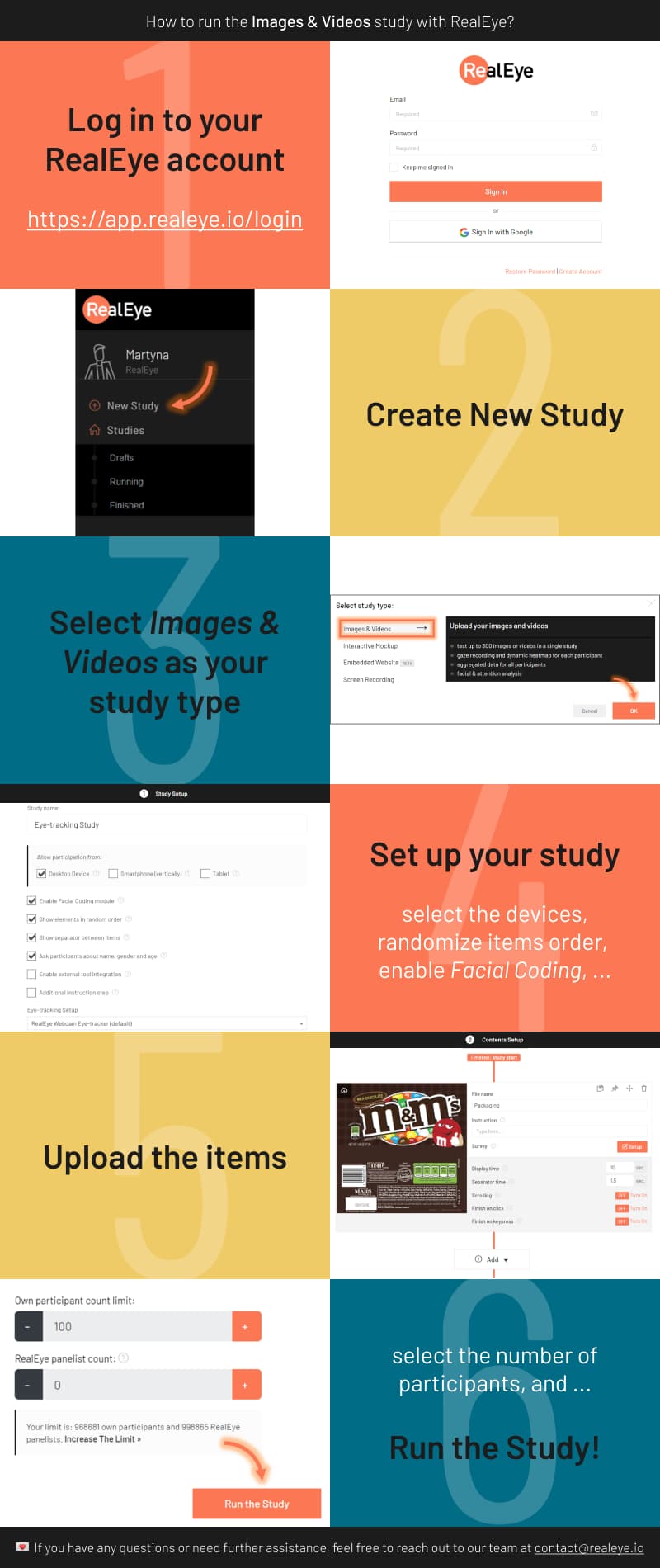

Follow the steps below to start your own experiment with RealEye:

Ready to set up your own study? Visit the RealEye Support page to learn more, and keep us posted on your results! 🚀